glibc下free实现原理

2022-12-16

8 min read

_int_free函数

free函数也和malloc一样,只是经过包装后的,最主要的处理逻辑还是在_int_free函数当中,主要是__libc_free函数调用的_int_free函数

__libc_free (void *mem)

{

mstate ar_ptr;

mchunkptr p; /* chunk corresponding to mem */

void (*hook) (void *, const void *)

= atomic_forced_read (__free_hook);

if (__builtin_expect (hook != NULL, 0))

{

(*hook)(mem, RETURN_ADDRESS (0));

return;

}

if (mem == 0) /* free(0) has no effect */

return;

p = mem2chunk (mem);

if (chunk_is_mmapped (p)) /* release mmapped memory. */

{

/* see if the dynamic brk/mmap threshold needs adjusting */

if (!mp_.no_dyn_threshold

&& p->size > mp_.mmap_threshold

&& p->size <= DEFAULT_MMAP_THRESHOLD_MAX)

{

mp_.mmap_threshold = chunksize (p);

mp_.trim_threshold = 2 * mp_.mmap_threshold;

LIBC_PROBE (memory_mallopt_free_dyn_thresholds, 2,

mp_.mmap_threshold, mp_.trim_threshold);

}

munmap_chunk (p);

return;

}

ar_ptr = arena_for_chunk (p);

_int_free (ar_ptr, p, 0);

}

变量定义和初始化

static void

_int_free (mstate av, mchunkptr p, int have_lock)

{

INTERNAL_SIZE_T size; /* its size */

mfastbinptr *fb; /* associated fastbin */

mchunkptr nextchunk; /* next contiguous chunk */

INTERNAL_SIZE_T nextsize; /* its size */

int nextinuse; /* true if nextchunk is used */

INTERNAL_SIZE_T prevsize; /* size of previous contiguous chunk */

mchunkptr bck; /* misc temp for linking */

mchunkptr fwd; /* misc temp for linking */

const char *errstr = NULL;

int locked = 0;

size = chunksize (p);

这些在上篇差不多都见过了,bck和fwd就是个中间变量,size就是chunk的大小,等等这些就不说了。

基础检查

/* Little security check which won't hurt performance: the

allocator never wrapps around at the end of the address space.

Therefore we can exclude some size values which might appear

here by accident or by "design" from some intruder. */

if (__builtin_expect ((uintptr_t) p > (uintptr_t) -size, 0)

|| __builtin_expect (misaligned_chunk (p), 0))

{

errstr = "free(): invalid pointer";

errout:

if (!have_lock && locked)

(void) mutex_unlock (&av->mutex);

malloc_printerr (check_action, errstr, chunk2mem (p), av);

return;

}

/* We know that each chunk is at least MINSIZE bytes in size or a

multiple of MALLOC_ALIGNMENT. */

if (__glibc_unlikely (size < MINSIZE || !aligned_OK (size)))

{

errstr = "free(): invalid size";

goto errout;

}

check_inuse_chunk(av, p);

需要满足以下几个条件

- p>-size 这里我还是不是很懂

- size>MINSIZE 这里需要chunk的大小大于最小值

- aligned_OK (size)的结果为true,这里主要是满足每个chunk的size是MALLOC_ALIGNMENT的整数倍

尝试把 chunk放入fastbins

if ((unsigned long)(size) <= (unsigned long)(get_max_fast ())

#if TRIM_FASTBINS

/*

If TRIM_FASTBINS set, don't place chunks

bordering top into fastbins

*/

&& (chunk_at_offset(p, size) != av->top)

#endif

) {

if (__builtin_expect (chunk_at_offset (p, size)->size <= 2 * SIZE_SZ, 0)

|| __builtin_expect (chunksize (chunk_at_offset (p, size))

>= av->system_mem, 0))

{

/* We might not have a lock at this point and concurrent modifications

of system_mem might have let to a false positive. Redo the test

after getting the lock. */

if (have_lock

|| ({ assert (locked == 0);

mutex_lock(&av->mutex);

locked = 1;

chunk_at_offset (p, size)->size <= 2 * SIZE_SZ

|| chunksize (chunk_at_offset (p, size)) >= av->system_mem;

}))

{

errstr = "free(): invalid next size (fast)";

goto errout;

}

if (! have_lock)

{

(void)mutex_unlock(&av->mutex);

locked = 0;

}

}

free_perturb (chunk2mem(p), size - 2 * SIZE_SZ);

set_fastchunks(av);

//获取被释放的chunk的下标

unsigned int idx = fastbin_index(size);

//获取被释放的chunk的指针

fb = &fastbin (av, idx);

/* Atomically link P to its fastbin: P->FD = *FB; *FB = P; */

//定义了两个变量,一个初始化了

mchunkptr old = *fb, old2;

unsigned int old_idx = ~0u;

do

{

/* Check that the top of the bin is not the record we are going to add

(i.e., double free). */

if (__builtin_expect (old == p, 0))

{

errstr = "double free or corruption (fasttop)";

goto errout;

}

/* Check that size of fastbin chunk at the top is the same as

size of the chunk that we are adding. We can dereference OLD

only if we have the lock, otherwise it might have already been

deallocated. See use of OLD_IDX below for the actual check. */

if (have_lock && old != NULL)

old_idx = fastbin_index(chunksize(old));

p->fd = old2 = old;

}

while ((old = catomic_compare_and_exchange_val_rel (fb, p, old2)) != old2);

if (have_lock && old != NULL && __builtin_expect (old_idx != idx, 0))

{

errstr = "invalid fastbin entry (free)";

goto errout;

}

}

在free_perturb (chunk2mem(p), size - 2 * SIZE_SZ);也就是37行前都是进行一些检查,然后在下面是把chunk放入fastbins,对于set_fastchunks,宏定义为#define set_fastchunks(M) catomic_and(&(M)->flags, ~FASTCHUNKS_BIT),就是让malloc_state中的flag中标记是否含有fastbin的标志位为0。然后就是一个do-while循环,这个do-while循环在之前见过差不多的,用到了catomic_compare_and_exchange_val_rel宏函数.

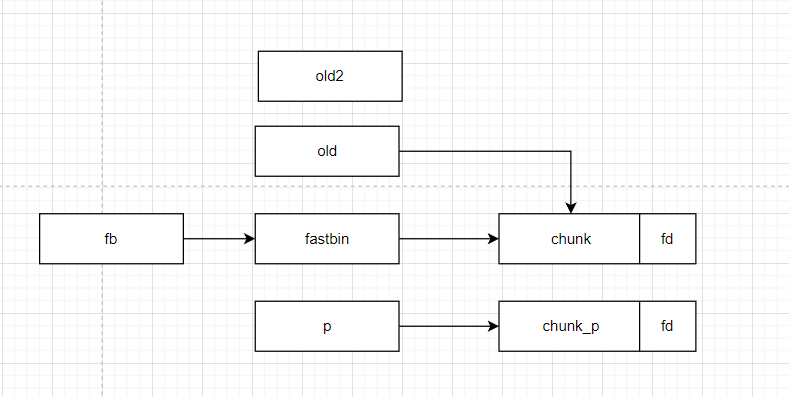

执行do前

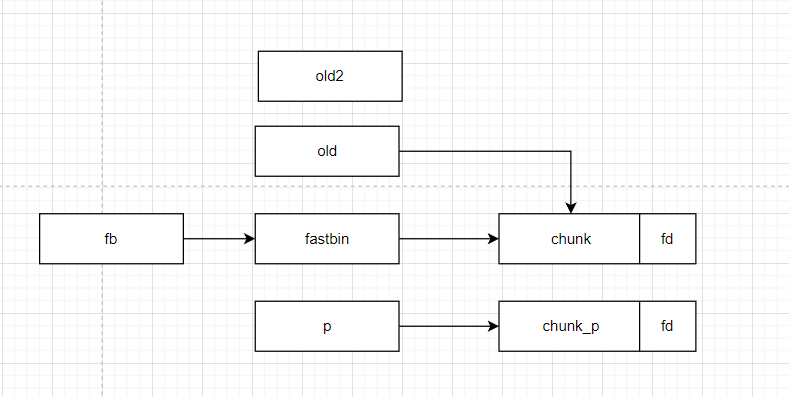

执行do后,还没执行while

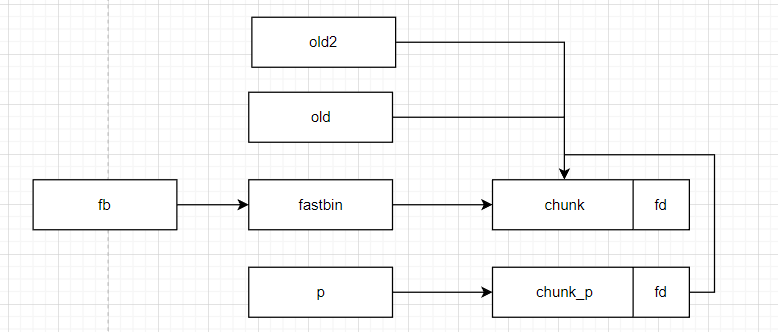

执行while后

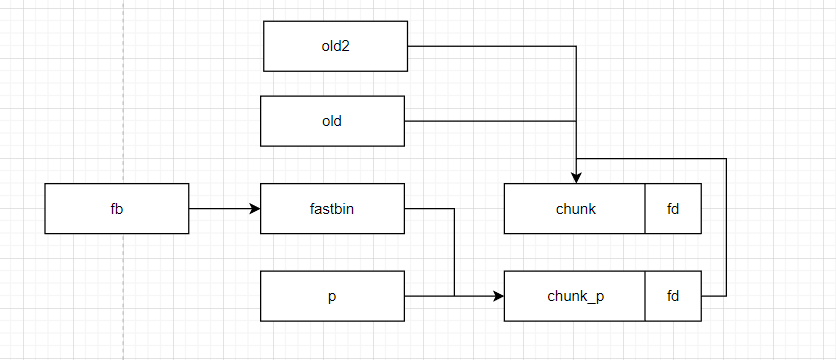

此时chunk_p就已经放到了fastbin当中了

合并相邻空闲chunk

先做一些必要的检查

else if (!chunk_is_mmapped(p)) {

if (! have_lock) {

(void)mutex_lock(&av->mutex);

locked = 1;

}

nextchunk = chunk_at_offset(p, size);

/* Lightweight tests: check whether the block is already the

top block. */

if (__glibc_unlikely (p == av->top))

{

errstr = "double free or corruption (top)";

goto errout;

}

/* Or whether the next chunk is beyond the boundaries of the arena. */

if (__builtin_expect (contiguous (av)

&& (char *) nextchunk

>= ((char *) av->top + chunksize(av->top)), 0))

{

errstr = "double free or corruption (out)";

goto errout;

}

/* Or whether the block is actually not marked used. */

if (__glibc_unlikely (!prev_inuse(nextchunk)))

{

errstr = "double free or corruption (!prev)";

goto errout;

}

nextsize = chunksize(nextchunk);

if (__builtin_expect (nextchunk->size <= 2 * SIZE_SZ, 0)

|| __builtin_expect (nextsize >= av->system_mem, 0))

{

errstr = "free(): invalid next size (normal)";

goto errout;

}

free_perturb (chunk2mem(p), size - 2 * SIZE_SZ);

进行合并

/* consolidate backward */

if (!prev_inuse(p)) {

prevsize = p->prev_size;

size += prevsize;

p = chunk_at_offset(p, -((long) prevsize));

unlink(av, p, bck, fwd);

}

if (nextchunk != av->top) {

/* get and clear inuse bit */

nextinuse = inuse_bit_at_offset(nextchunk, nextsize);

/* consolidate forward */

if (!nextinuse) {

unlink(av, nextchunk, bck, fwd);

size += nextsize;

} else

clear_inuse_bit_at_offset(nextchunk, 0);

/*

Place the chunk in unsorted chunk list. Chunks are

not placed into regular bins until after they have

been given one chance to be used in malloc.

*/

bck = unsorted_chunks(av);

fwd = bck->fd;

if (__glibc_unlikely (fwd->bk != bck))

{

errstr = "free(): corrupted unsorted chunks";

goto errout;

}

p->fd = fwd;

p->bk = bck;

if (!in_smallbin_range(size))

{

p->fd_nextsize = NULL;

p->bk_nextsize = NULL;

}

bck->fd = p;

fwd->bk = p;

set_head(p, size | PREV_INUSE);

set_foot(p, size);

check_free_chunk(av, p);

}

- 如果前面有个chunk是free的,合并这个chunk

- 如果下一个chunk是topchunk.直接并入

- 如果下个chunk不是topchunk并且不在使用,会进行合并,清空使用状态

- 如果下个chunk是topchunk,并且正在使用,会放入到unsorted bin当中

返还内存

/*

If freeing a large space, consolidate possibly-surrounding

chunks. Then, if the total unused topmost memory exceeds trim

threshold, ask malloc_trim to reduce top.

Unless max_fast is 0, we don't know if there are fastbins

bordering top, so we cannot tell for sure whether threshold

has been reached unless fastbins are consolidated. But we

don't want to consolidate on each free. As a compromise,

consolidation is performed if FASTBIN_CONSOLIDATION_THRESHOLD

is reached.

*/

//size大于FASTBIN_CONSOLIDATION_THRESHOLD 向系统返还内存

if ((unsigned long)(size) >= FASTBIN_CONSOLIDATION_THRESHOLD) {

if (have_fastchunks(av))

malloc_consolidate(av);

if (av == &main_arena) {

#ifndef MORECORE_CANNOT_TRIM

if ((unsigned long)(chunksize(av->top)) >=

(unsigned long)(mp_.trim_threshold))

systrim(mp_.top_pad, av);

#endif

} else {

/* Always try heap_trim(), even if the top chunk is not

large, because the corresponding heap might go away. */

heap_info *heap = heap_for_ptr(top(av));

assert(heap->ar_ptr == av);

heap_trim(heap, mp_.top_pad);

}

}

if (! have_lock) {

assert (locked);

(void)mutex_unlock(&av->mutex);

}

}

/*

If the chunk was allocated via mmap, release via munmap().

*/

else {

//释放mmap的chunk

munmap_chunk (p);

}

}